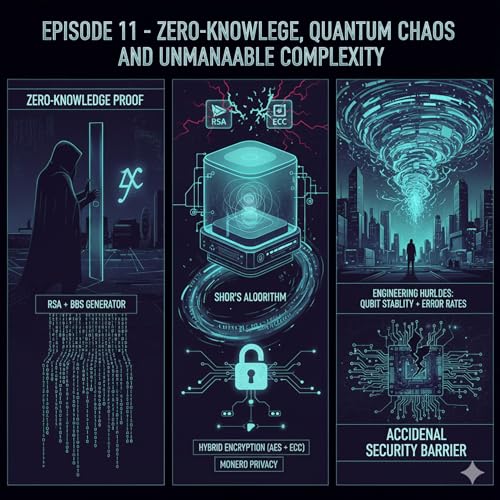

This episode dives into advanced cryptography and the foundations of digital security, starting with the counter-intuitive concept of Zero-Knowledge Proofs (ZKPs), which allow a system to prove a fact, like a valid request or knowing a password, without revealing the sensitive underlying data. The core idea of ZKPs and blind signatures is to establish mathematically verified trust by proving adherence to a protocol without disclosing the private content being signed or authenticated. However, a cryptographic proof only offers relative truth, guaranteeing that breaking the cipher is as hard as solving a recognized mathematical hard problem, such as factoring large numbers, but only guaranteeing security against one specific attack vector. For instance, the security of RSA relies on the difficulty of factoring, which necessitates a constant increase in key sizes to stay ahead of computational advances.

To mitigate risks like statistical analysis or comparison attacks, modern cryptography mandates probabilistic encryption (PE), which introduces randomness during encryption so that the same message produces a different ciphertext every time, thus avoiding information leakage. The security of these PE schemes is often tied back to the difficulty of factoring, particularly in designs that use the Blum-Blum-Shub (BBS) generator to produce the necessary random bits. For efficiency, common practice relies on hybrid encryption systems, using fast symmetric ciphers like AES for the bulk of the message and slower, but powerful, asymmetric ciphers like ECC (Elliptic Curve Cryptography) only to encrypt the small session key—a technique often employed in decentralized systems and privacy coins like Monero.

The ultimate threat to this entire system is Shor's algorithm, which, if run on a sufficiently large quantum computer, would break all current public-key cryptography (RSA, ECC, Diffie-Hellman) due to its exponential speedup for factoring and discrete logarithm problems. However, the immediate quantum threat is paradoxically constrained by massive engineering hurdles—specifically, the challenge of building a sufficiently large, stable quantum computer due to the extreme fragility and high error rates of quantum bits (qubits). For the foreseeable future, the required exponential increase in scale and complexity needed to build a cryptanalytic quantum computer acts as an accidental barrier to security.

36 mins

36 mins 28 mins

28 mins 41 mins

41 mins 40 mins

40 mins 36 mins

36 mins 33 mins

33 mins 27 mins

27 mins 33 mins

33 mins